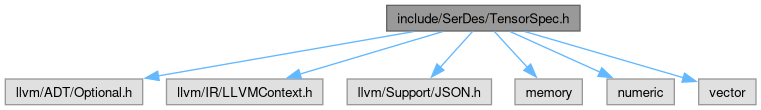

#include "llvm/ADT/Optional.h"

#include "llvm/IR/LLVMContext.h"

#include "llvm/Support/JSON.h"

#include <memory>

#include <numeric>

#include <vector>


◆ _TENSOR_TYPE_ENUM_MEMBERS

`#define _TENSOR_TYPE_ENUM_MEMBERS(_, Name) Name,`

◆ SUPPORTED_TENSOR_TYPES

`#define SUPPORTED_TENSOR_TYPES(M)`

Value:

    M(float, Float) \
    M(double, Double) \
    M(int8_t, Int8) \
    M(uint8_t, UInt8) \
    M(int16_t, Int16) \
    M(uint16_t, UInt16) \
    M(int32_t, Int32) \
    M(uint32_t, UInt32) \
    M(int64_t, Int64) \
    M(uint64_t, UInt64)

TensorSpec encapsulates the specification of a tensor: its dimensions, or "shape" (row-major); its type (see the TensorSpec::getDataType specializations for supported types); and its name and port (see "TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems", section 4.2, para 2: https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/45166.pdf).
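To make the description above more concrete, the following is a minimal sketch of a spec-like record. `SketchTensorSpec` and all of its members are hypothetical names chosen for illustration; they are not the actual `TensorSpec` interface. The sketch assumes that the element count is the product of the shape dimensions and that "row-major" means the last dimension varies fastest.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <string>
#include <vector>

// Hypothetical stand-in for a tensor specification (not the LLVM class).
struct SketchTensorSpec {
  std::string Name;                // tensor name
  int Port;                        // output port of the producing node
  std::string TypeName;            // e.g. "Float"; a real spec would use an enum
  std::size_t ElementSize;         // size in bytes of one element
  std::vector<std::int64_t> Shape; // dimensions, row-major

  // Number of elements: the product of all dimensions (1 for an empty shape).
  std::size_t elementCount() const {
    return std::accumulate(Shape.begin(), Shape.end(), std::size_t{1},
                           [](std::size_t Acc, std::int64_t Dim) {
                             return Acc * static_cast<std::size_t>(Dim);
                           });
  }

  // Bytes needed to hold the whole tensor.
  std::size_t totalSizeInBytes() const { return elementCount() * ElementSize; }

  // Row-major offset of a multi-dimensional index: the last dimension
  // varies fastest.
  std::size_t flatIndex(const std::vector<std::int64_t> &Index) const {
    assert(Index.size() == Shape.size() && "index rank must match shape rank");
    std::size_t Offset = 0;
    for (std::size_t I = 0; I < Shape.size(); ++I)
      Offset = Offset * static_cast<std::size_t>(Shape[I]) +
               static_cast<std::size_t>(Index[I]);
    return Offset;
  }

  // Two specs are equal when name, port, type and shape all agree.
  bool operator==(const SketchTensorSpec &Other) const {
    return Name == Other.Name && Port == Other.Port &&
           TypeName == Other.TypeName && Shape == Other.Shape;
  }
};
```

For a spec with shape `{2, 3}` of `float`, `elementCount()` is 6 and the element at index `{1, 2}` sits at flat offset 5, the last slot of the buffer.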

Known tensor types. The left part is the C type, the right is a name we can use to identify the type (to implement TensorSpec equality checks), and to use, if needed, when mapping to an underlying evaluator's type system. The main requirement is that the C type we use has the same size and encoding (e.g. endian-ness) as the one used by the evaluator.

Definition at line 33 of file TensorSpec.h.
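The list above follows the X-macro pattern: each `M(CType, Name)` entry is expanded by whatever macro is passed in as `M`. The sketch below illustrates one way such a list can generate an enumeration plus per-type lookups. The names `SketchTensorType`, `sketchTypeName`, `sketchElementSize` and the helper macros are hypothetical, and the `Invalid` and `Total` enumerators are assumptions made for this example rather than a statement of what the header defines.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Local copy of the documented list so the sketch compiles on its own.
#define SUPPORTED_TENSOR_TYPES(M)                                              \
  M(float, Float)                                                              \
  M(double, Double)                                                            \
  M(int8_t, Int8)                                                              \
  M(uint8_t, UInt8)                                                            \
  M(int16_t, Int16)                                                            \
  M(uint16_t, UInt16)                                                          \
  M(int32_t, Int32)                                                            \
  M(uint32_t, UInt32)                                                          \
  M(int64_t, Int64)                                                            \
  M(uint64_t, UInt64)

// Each entry contributes "Name," to the enumerator list; the C type is unused.
#define SKETCH_ENUM_MEMBER(_, Name) Name,
enum class SketchTensorType {
  Invalid, // assumption: a sentinel for unsupported types
  SUPPORTED_TENSOR_TYPES(SKETCH_ENUM_MEMBER)
  Total    // assumption: one past the last supported type
};
#undef SKETCH_ENUM_MEMBER

// Human-readable name for a type, generated from the same list.
inline std::string sketchTypeName(SketchTensorType Type) {
  switch (Type) {
#define SKETCH_NAME_CASE(_, Name)                                              \
  case SketchTensorType::Name:                                                 \
    return #Name;
    SUPPORTED_TENSOR_TYPES(SKETCH_NAME_CASE)
#undef SKETCH_NAME_CASE
  default:
    return "Invalid";
  }
}

// Size in bytes of one element, using the C type on the left of each entry.
inline std::size_t sketchElementSize(SketchTensorType Type) {
  switch (Type) {
#define SKETCH_SIZE_CASE(CType, Name)                                          \
  case SketchTensorType::Name:                                                 \
    return sizeof(CType);
    SUPPORTED_TENSOR_TYPES(SKETCH_SIZE_CASE)
#undef SKETCH_SIZE_CASE
  default:
    return 0;
  }
}
```

Because the enum, the names and the sizes all come from one list, adding a new supported type in this sketch means adding a single `M(...)` line.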

◆ TFUTILS_GETDATATYPE_DEF

`#define TFUTILS_GETDATATYPE_DEF(T, Name) template <> TensorType TensorSpec::getDataType<T>();`
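`TFUTILS_GETDATATYPE_DEF` expands to a declaration of an explicit specialization of `TensorSpec::getDataType<T>` for one `(T, Name)` pair. The sketch below shows how such a macro could be applied to a type list and paired with matching definitions. `DemoTensorType`, `DemoTensorSpec`, `DEMO_TYPES` and the `DEMO_*` macros are hypothetical; the shortened type list and the primary template returning `Invalid` are assumptions made for this example, not a description of the actual header.

```cpp
#include <cstdint>

// Shortened, hypothetical type list in the same (C type, Name) format.
#define DEMO_TYPES(M)                                                          \
  M(float, Float)                                                              \
  M(int32_t, Int32)                                                            \
  M(uint64_t, UInt64)

#define DEMO_ENUM_MEMBER(_, Name) Name,
enum class DemoTensorType { Invalid, DEMO_TYPES(DEMO_ENUM_MEMBER) };
#undef DEMO_ENUM_MEMBER

struct DemoTensorSpec {
  // Primary template: any type without a specialization maps to Invalid
  // (an assumption of this sketch).
  template <typename T> static DemoTensorType getDataType() {
    return DemoTensorType::Invalid;
  }
};

// Declarations: one explicit specialization per list entry, mirroring the
// shape of the documented macro.
#define DEMO_GETDATATYPE_DEF(T, Name)                                          \
  template <> DemoTensorType DemoTensorSpec::getDataType<T>();
DEMO_TYPES(DEMO_GETDATATYPE_DEF)
#undef DEMO_GETDATATYPE_DEF

// Definitions: each specialization returns the enumerator named in its entry.
// In a multi-file project these would live in a single source file.
#define DEMO_GETDATATYPE_IMPL(T, Name)                                         \
  template <> DemoTensorType DemoTensorSpec::getDataType<T>() {                \
    return DemoTensorType::Name;                                               \
  }
DEMO_TYPES(DEMO_GETDATATYPE_IMPL)
#undef DEMO_GETDATATYPE_IMPL
```

With these in place, `DemoTensorSpec::getDataType<float>()` yields `DemoTensorType::Float`, while a type outside the list, such as `bool`, falls back to the primary template.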