Implementation file for the abstraction of a tensor type, and JSON loading utils.

#include "SerDes/TensorSpec.h"
#include "llvm/ADT/None.h"
#include "llvm/ADT/StringExtras.h"
#include "llvm/Support/Debug.h"
#include "llvm/Support/JSON.h"
#include <array>
#include <cassert>
#include <numeric>

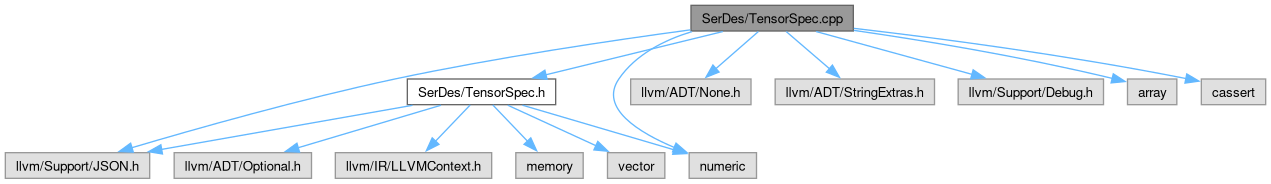

Include dependency graph for TensorSpec.cpp:


Namespaces

| Kind | Name |
|---|---|
| namespace | MLBridge |

Macros

| Kind | Macro |
|---|---|
| #define | TFUTILS_GETDATATYPE_IMPL(T, E) template <> TensorType TensorSpec::getDataType<T>() { return TensorType::E; } |
| #define | TFUTILS_GETNAME_IMPL(T, _) #T, |
| #define | PARSE_TYPE(T, E) |
| #define | _IMR_DBG_PRINTER(T, N) |

Functions

| Return type | Function | Description |
|---|---|---|
| StringRef | MLBridge::toString(TensorType TT) | |
| llvm::Optional<TensorSpec> | MLBridge::getTensorSpecFromJSON(LLVMContext &Ctx, const json::Value &Value) | |
| std::string | MLBridge::tensorValueToString(const char *Buffer, const TensorSpec &Spec) | For debugging. |

Detailed Description

Implementation file for the abstraction of a tensor type, and JSON loading utils.

Definition in file TensorSpec.cpp.

Macro Definition Documentation

◆ _IMR_DBG_PRINTER

#define _IMR_DBG_PRINTER(T, N)

Value:

case TensorType::N: { \
  const T *TypedBuff = reinterpret_cast<const T *>(Buffer); \
  auto R = llvm::make_range(TypedBuff, TypedBuff + Spec.getElementCount()); \
  return llvm::join( \
      llvm::map_range(R, [](T V) { return std::to_string(V); }), ","); \
}
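Judging from its body, _IMR_DBG_PRINTER is meant to be applied to every (C++ type, TensorType enumerator) pair of a type list inside a switch on the spec's element type. That produces one case per type, each of which reinterprets the raw buffer as T and joins the elements with commas. The sketch below reproduces that X-macro pattern without LLVM, for illustration only. The type list SKETCH_TENSOR_TYPES, the enum ElemType, and the function bufferToString are hypothetical names, and a plain loop stands in for llvm::map_range and llvm::join.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical element-type list in X-macro form: M(C++ type, enumerator).
#define SKETCH_TENSOR_TYPES(M) \
  M(float, Float)              \
  M(int32_t, Int32)            \
  M(int64_t, Int64)

// Build the enum from the same list so the two cannot drift apart.
#define SKETCH_ENUM_ENTRY(T, N) N,
enum class ElemType { SKETCH_TENSOR_TYPES(SKETCH_ENUM_ENTRY) };
#undef SKETCH_ENUM_ENTRY

// One `case` per element type, modelled on _IMR_DBG_PRINTER: reinterpret the
// raw bytes as T and join the elements with commas.
#define SKETCH_DBG_PRINTER(T, N)                              \
  case ElemType::N: {                                         \
    const T *TypedBuff = reinterpret_cast<const T *>(Buffer); \
    std::string Out;                                          \
    for (std::size_t I = 0; I < Count; ++I) {                 \
      if (I != 0)                                             \
        Out += ",";                                           \
      Out += std::to_string(TypedBuff[I]);                    \
    }                                                         \
    return Out;                                               \
  }

// Render `Count` elements of type `Ty` stored in `Buffer` as "v0,v1,...".
std::string bufferToString(const char *Buffer, ElemType Ty, std::size_t Count) {
  assert(Buffer != nullptr || Count == 0);
  switch (Ty) { SKETCH_TENSOR_TYPES(SKETCH_DBG_PRINTER) }
  return ""; // not reached for valid enumerators
}
#undef SKETCH_DBG_PRINTER
```

For example, an int32_t buffer holding {1, -2, 3} renders as "1,-2,3". Because values go through std::to_string, as in the original macro, a float 1.5f renders as "1.500000".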

◆ PARSE_TYPE

#define PARSE_TYPE(T, E)

Value:

if (TensorType == #T) \
  return TensorSpec::createSpec<T>(TensorName, TensorShape, TensorPort);
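PARSE_TYPE turns a type list into a chain of if statements. #T stringifies the C++ type name, and the first entry whose spelling equals TensorType returns a spec of that element type. The macro relies on TensorType (here apparently a type-name string), TensorName, TensorShape, and TensorPort being in scope at the expansion site, presumably inside getTensorSpecFromJSON. The standalone sketch below shows only the string-to-type dispatch. SKETCH_TENSOR_TYPES, ElemType, and parseElemType are hypothetical names, and std::optional stands in for llvm::Optional.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>

// Hypothetical element-type list in X-macro form: M(C++ type, enumerator).
#define SKETCH_TENSOR_TYPES(M) \
  M(float, Float)              \
  M(double, Double)            \
  M(int64_t, Int64)

#define SKETCH_ENUM_ENTRY(T, E) E,
enum class ElemType { SKETCH_TENSOR_TYPES(SKETCH_ENUM_ENTRY) };
#undef SKETCH_ENUM_ENTRY

// Same shape as PARSE_TYPE: compare the input against the stringified
// C++ type name and return on the first match.
#define SKETCH_PARSE_TYPE(T, E) \
  if (TypeName == #T)           \
    return ElemType::E;

// Map a type-name string such as "float" to its enumerator.
std::optional<ElemType> parseElemType(const std::string &TypeName) {
  SKETCH_TENSOR_TYPES(SKETCH_PARSE_TYPE)
  return std::nullopt; // no listed type has this spelling
}
#undef SKETCH_PARSE_TYPE
```

Because the comparison uses the stringified C++ spelling, this sketch accepts "int64_t" but not "int64" or "i64". Any unmatched string falls through to std::nullopt.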

◆ TFUTILS_GETDATATYPE_IMPL

#define TFUTILS_GETDATATYPE_IMPL(T, E) template <> TensorType TensorSpec::getDataType<T>() { return TensorType::E; }

Definition at line 31 of file TensorSpec.cpp.
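TFUTILS_GETDATATYPE_IMPL generates one explicit specialization of TensorSpec::getDataType<T>() per listed type. The result is a compile-time map from a C++ element type to its TensorType enumerator. The sketch below shows the technique with a free function template instead of a member. ElemType, getElemType, and the type list are hypothetical names. Leaving the primary template undefined, so that an unsupported T fails at link time, is a design choice of this sketch and is not documented on this page.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical element-type list in X-macro form: M(C++ type, enumerator).
#define SKETCH_TENSOR_TYPES(M) \
  M(float, Float)              \
  M(int32_t, Int32)            \
  M(int64_t, Int64)

#define SKETCH_ENUM_ENTRY(T, E) E,
enum class ElemType { SKETCH_TENSOR_TYPES(SKETCH_ENUM_ENTRY) };
#undef SKETCH_ENUM_ENTRY

// Primary template: declared but never defined, so only the listed
// types below have an implementation.
template <typename T> ElemType getElemType();

// One explicit specialization per listed type, as TFUTILS_GETDATATYPE_IMPL does.
#define SKETCH_GETDATATYPE_IMPL(T, E) \
  template <> ElemType getElemType<T>() { return ElemType::E; }
SKETCH_TENSOR_TYPES(SKETCH_GETDATATYPE_IMPL)
#undef SKETCH_GETDATATYPE_IMPL
```

A generic caller can then write getElemType<T>() to record a buffer's element type without a runtime lookup table.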

◆ TFUTILS_GETNAME_IMPL

#define TFUTILS_GETNAME_IMPL(T, _) #T,
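TFUTILS_GETNAME_IMPL expands each list entry to its stringified type name followed by a comma, which suits it for building an array initializer of names. A plausible consumer is MLBridge::toString(TensorType), which could index such an array by the enumerator's value. That pairing is an assumption, because this page does not show the body of toString. The sketch below uses hypothetical names throughout.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string_view>

// Hypothetical element-type list in X-macro form: M(C++ type, enumerator).
#define SKETCH_TENSOR_TYPES(M) \
  M(float, Float)              \
  M(double, Double)            \
  M(int32_t, Int32)

// Enumerators are declared in list order, so each one's value is its index.
#define SKETCH_ENUM_ENTRY(T, E) E,
enum class ElemType { SKETCH_TENSOR_TYPES(SKETCH_ENUM_ENTRY) };
#undef SKETCH_ENUM_ENTRY

// Same expansion as TFUTILS_GETNAME_IMPL: "float", "double", "int32_t",
// each followed by a comma (a trailing comma is legal in brace init).
#define SKETCH_GETNAME_IMPL(T, _) #T,
constexpr const char *ElemTypeNames[] = {SKETCH_TENSOR_TYPES(SKETCH_GETNAME_IMPL)};
#undef SKETCH_GETNAME_IMPL

// Look up the stringified type name for an enumerator.
std::string_view toString(ElemType Ty) {
  const auto Index = static_cast<std::size_t>(Ty);
  assert(Index < sizeof(ElemTypeNames) / sizeof(ElemTypeNames[0]));
  return ElemTypeNames[Index];
}
```

Deriving the enum and the name table from one list keeps their order in sync, which is what makes the index lookup safe.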